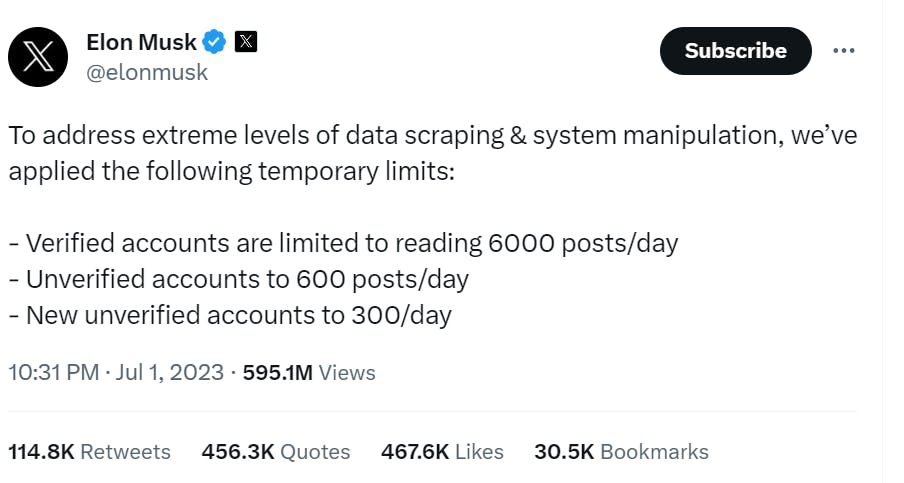

Rate Limiting in the Spotlight

Remember this intriguing tweet by Elon Musk discussing Twitter's controversial move of rate limiting the number of posts you can read per day? It sparked a heated debate. This post is not about the debate. It is about what rate limiting actually is.

Let's dive deeper into the concept of rate limiting. What exactly is it, and why is it a critical mechanism for keeping systems performant and fault tolerant? Let's find out.

What is Rate Limiting:

Rate Limiting is a process used to control and restrict the number of requests a server accepts from a client within a given period of time. It plays a vital role in reducing the load on the application server, enhancing system fault tolerance, and maintaining high availability.

Benefits of Rate Limiting:

Improved Performance: By regulating incoming requests, rate limiting prevents server overload, ensuring a smooth user experience and faster response times.

High Availability: Mitigates the risk of server crashes due to excessive traffic, enabling the system to remain available to users even during peak times.

Fault Tolerance: With rate limiting in place, the system becomes more resilient to unexpected spikes in traffic, reducing the chances of service disruptions.

Efficiently Handling Burst Requests: Rate limiting effectively manages sudden surges in requests, preventing bottlenecks and maintaining consistent service quality.

Drawback:

While rate limiting offers significant advantages, it does come with a limitation: once a user reaches the limit, the system rejects or delays their further requests, even when those requests are legitimate.

Rate Limiting Algorithms:

A variety of algorithms exist to implement rate limiting. Some widely used ones include the Token Bucket algorithm, the Leaky Bucket algorithm, and the Sliding Window Log algorithm.
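To make one of these concrete, here is a minimal Python sketch of the Sliding Window Log idea: keep a log of request timestamps and allow a new request only if fewer than the limit fall inside the most recent window. The class name, its parameters, and the injectable `clock` are assumptions made for illustration, and this variant logs only accepted requests (some implementations log rejected ones too).

```python
import time
from collections import deque


class SlidingWindowLog:
    """Illustrative limiter: at most `limit` requests per `window_seconds`."""

    def __init__(self, limit, window_seconds, clock=time.monotonic):
        self.limit = limit
        self.window = window_seconds
        self.clock = clock      # injectable so the sketch can be tested
        self.log = deque()      # timestamps of accepted requests, oldest first

    def allow(self):
        now = self.clock()
        # Drop timestamps that have slid out of the window.
        while self.log and self.log[0] <= now - self.window:
            self.log.popleft()
        if len(self.log) < self.limit:
            self.log.append(now)
            return True
        return False
```

The trade-off is memory: the log stores one timestamp per accepted request, so it grows with the limit.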

Exploring the Token Bucket Algorithm:

Let's focus on the Token Bucket algorithm, a popular approach for rate limiting.

Visualize a bucket with a limited capacity to hold tokens (let's say 10 tokens). At regular intervals, tokens are added to the bucket until it is full again. Each time a request is received from a user, one token is taken out of the bucket.

When the bucket is empty (after 10 requests in this case), the user can no longer make additional requests until the bucket is refilled. For example, if the bucket is set to be refilled every day, the user can make 10 requests per day.

This is the essence of the Token Bucket Rate Limiting algorithm.
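Here is a minimal Python sketch of a token bucket, assuming the common formulation in which tokens are added at a steady rate up to the bucket's capacity and each request spends one token. The names `TokenBucket`, `capacity`, `refill_rate`, and `clock` are illustrative choices, not part of any particular library.

```python
import time


class TokenBucket:
    """Illustrative token bucket: steady refill, one token per request."""

    def __init__(self, capacity, refill_rate, clock=time.monotonic):
        self.capacity = capacity          # maximum tokens the bucket can hold
        self.refill_rate = refill_rate    # tokens added per second
        self.clock = clock                # injectable so the sketch can be tested
        self.tokens = float(capacity)     # start with a full bucket
        self.last_refill = clock()

    def _refill(self):
        # Add the tokens earned since the last check, never exceeding capacity.
        now = self.clock()
        elapsed = now - self.last_refill
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_rate)
        self.last_refill = now

    def allow(self):
        self._refill()
        if self.tokens >= 1:
            self.tokens -= 1              # spend one token on this request
            return True
        return False                      # bucket is empty: reject the request
```

With `TokenBucket(capacity=10, refill_rate=10 / 86400)`, a user could send a burst of 10 requests and would then earn tokens back at a rate of 10 per day. Refilling continuously rather than all at once is a design choice of this sketch.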

Ways to Implement Rate Limiting:

Rate limiting can be implemented on the client side, on the server side, or in a component that sits in between, such as middleware or an API gateway. However, client-side rate limiting is generally not preferred, because malicious users control the client and can circumvent it easily.
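As a rough illustration of the server-side approach, the sketch below keeps a separate counter for each client and answers with HTTP status 429 (Too Many Requests) once that client is over its limit. For brevity it uses a simple fixed-window counter rather than one of the algorithms named earlier, and `PerClientLimiter`, `check`, and `client_id` are hypothetical names. In a real service the client would typically be identified by something like an API key or IP address.

```python
import time

HTTP_OK = 200
HTTP_TOO_MANY_REQUESTS = 429


class PerClientLimiter:
    """Illustrative fixed-window counter, tracked separately per client."""

    def __init__(self, limit, window_seconds, clock=time.time):
        self.limit = limit
        self.window = window_seconds
        self.clock = clock       # injectable so the sketch can be tested
        self.counters = {}       # client_id -> (window_index, request_count)

    def check(self, client_id):
        # Identify which fixed window the current time falls into.
        window_index = int(self.clock() // self.window)
        seen_index, count = self.counters.get(client_id, (window_index, 0))
        if seen_index != window_index:
            count = 0            # a new window has started: reset the count
        if count >= self.limit:
            self.counters[client_id] = (window_index, count)
            return HTTP_TOO_MANY_REQUESTS
        self.counters[client_id] = (window_index, count + 1)
        return HTTP_OK
```

Because the counters live on the server, a client cannot reset or bypass them by modifying its own code.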

Conclusion:

Rate limiting is a powerful tool to ensure the smooth operation of applications and systems. By controlling the flow of incoming requests, it enhances performance, fault tolerance, and overall user experience. Choosing the right rate-limiting algorithm and implementation approach is crucial for achieving optimal results.